AI-generated images are becoming more realistic and more widespread by the day, subverting our ability to trust the content we consume. Even subtle alterations—erased objects, modified timestamps, fabricated gestures—can significantly distort meaning. While this AI technology is certainly exciting, it’s hard to deny that it brings newfound risks that affect our everyday lives.

Trufo, a self-funded team of researchers from Princeton, Stanford, UChicago, and NYU and engineers from Google, Netflix, and Meta, has found a way to preserve content authenticity in the generative AI era. After a year of R&D, the team has built the first solution that actually works: an invisible cryptographic watermark that survives image alterations and describes them.

Watermarks have historically been used for other purposes. “When people think of watermarks, they think of outdated tech that’s visible, forgeable, and generally irritating,” says Cindy Han, CEO of Trufo. “Ours is quite literally the opposite.”

“Google, Meta, and others have offered their own watermarks, but all of them have admitted that their watermarks are basically vulnerable to both forgery and removal,” says Jiaxin Guan, Assistant Professor at NYU and Chief Scientist of Trufo. “It’s very difficult to prevent removal. In Trufo’s case, we chose to make forgery extremely difficult—like cryptographically difficult.”

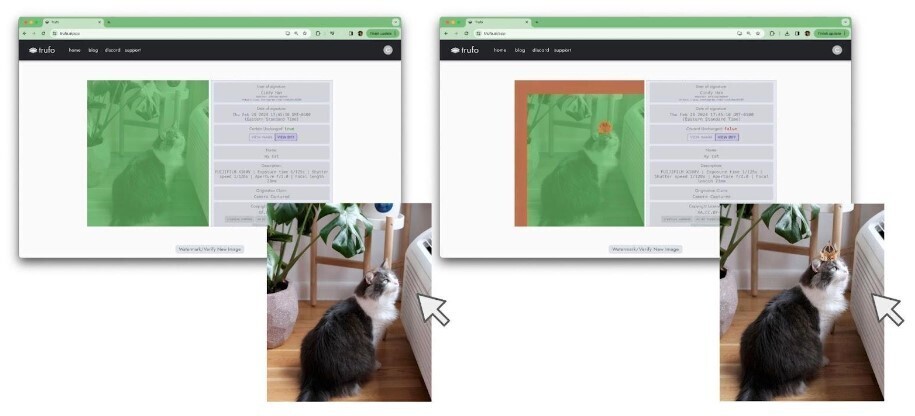

“Our watermark allows viewers to verify who created the image, whether it’s camera-captured or AI-generated,” says Han. “When the image is modified, the watermark can identify the alteration without needing a copy of the image. This has never been done before.”

Despite being a new player in the content authenticity space, Trufo has already assumed a leadership role in developing open-source watermark standards within NIST’s AI Safety Institute Consortium (AISIC).

Trufo also integrates with C2PA, so users get the popular metadata-based protocol for free.

“We are building a long-term solution,” says Guan. “But, there’s already immediate value: I can share my photos online knowing that if someone were to take those photos and manipulate them, those manipulations can be easily identified.”

These authentication capabilities, made possible by Trufo, will play a crucial role in mitigating AI risks to political elections, celebrity images, photojournalism, and copyright, and in preserving the day-to-day confidence of the average digital citizen.

“Getting the solution to work required incredible innovations, and we’re excited to invite users to test it out,” says Han. “You can’t take back your digital footprint, but you can take control of what you post from now on.”

A public beta, along with more information, can be found at https://trufo.ai.

For further information, please contact Ian Friedman, VP of Business Development and Strategic Partnerships:

228 Park Ave S, PMB 87518, New York, NY 10003-1502 USA